This blog is original content written for the Microsoft MVP Award Program Blog, posted here.

SQL Azure is a compelling platform for data storage. It’s cheap, reliable, and highly accessible. However, it’s not identical to on-premises SQL Server, and there are a number of things to be aware of when considering a move from one platform to the other, or simply adopting it. This article will walk through what the various SQL Azure options are, outline the processes involved in moving data from on-prem to Azure, and call out some "gotchas", or things to be aware of when operating a SQL Azure database.

COMPARISON WITH ON-PREM

SQL Azure is SQL Server behind the scenes, so most of the functionality is already there, including tables, views, stored procedures, triggers, functions, primary and foreign keys, and clustered indexes. There is no Windows authentication, however; it currently uses SQL authentication only.

SQL Azure separates logical administration from physical administration. There is no need to maintain, balance, upgrade or patch the server, as this is all done by Microsoft. You have no control over the physical database and log files and cannot get to them, so you cannot detach and attach databases. You also can’t reboot the server, so if you end up with a runaway query you may have to open a support ticket. Killing the session doesn’t always work.

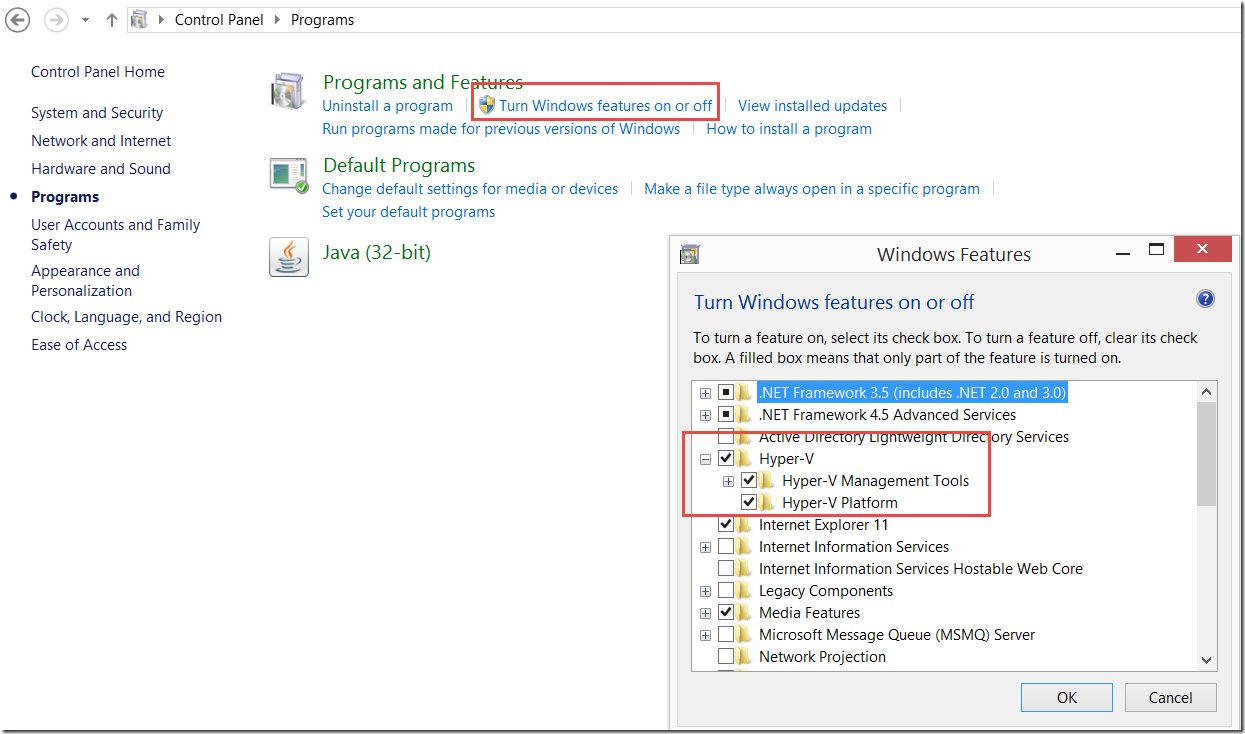

Most tools work with a SQL Azure database, including:

· .NET Framework via ADO.NET

· C / C++ via ODBC

· Java via Microsoft JDBC provider

· PHP via Microsoft PHP provider

· Frameworks such as OData, Entity Framework, WCF Data Services, NHibernate

Your application talks to the primary database, but there are actually 3 copies kept in sync for high availability. If the primary database should go down, one of the secondary copies will be promoted to be the primary database, and a new third copy will be created.

There is a requirement for tables in a SQL Azure database to have a clustered index. This is necessary to keep the 3 copies of the database in sync.

The maximum SQL Azure database size is currently 500GB, but you can get around this using SQL federations and partitioning your data across multiple nodes.

There are a number of partially supported and unsupported features. A few of the ones I run into regularly are:

· You cannot use the USE [databasename] SQL statement. Your application must open a separate connection to each database it needs to reach.

· Remove from indexes – NOT FOR REPLICATION

· Remove from your tables – WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

You can review a full list of unsupported features here: https://azure.microsoft.com/en-us/documentation/articles/sql-database-transact-sql-information/

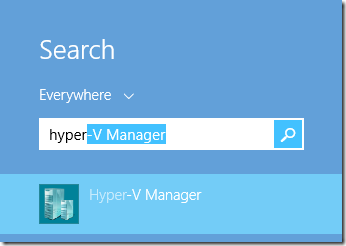

TOOLS

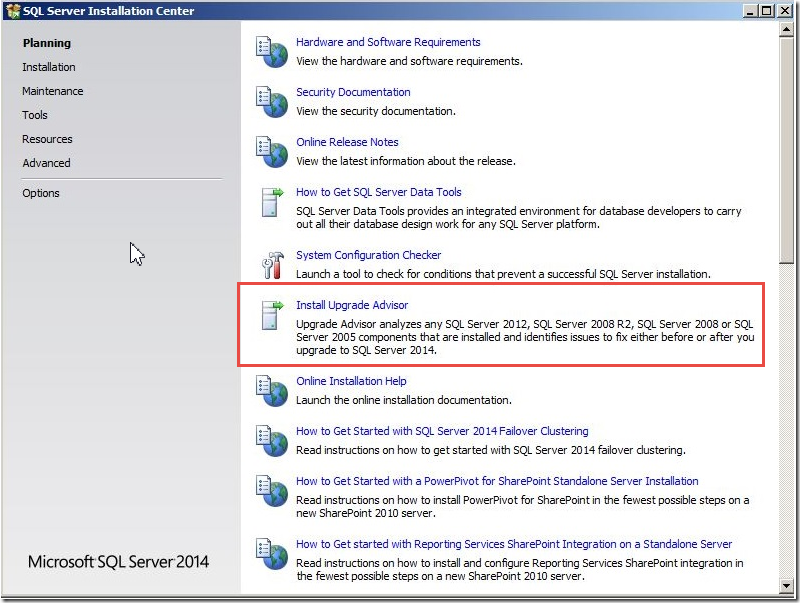

SQL Azure database uses Transact-SQL (T-SQL). There are a number of ways to connect to the database, including the Windows Azure Management Portal, SSMS (2008 R2 or newer), or command line utilities such as SQLCMD and BCP. You can move data to and from your SQL Azure database using anything that can connect to the database, such as SSIS or SSMS. When it comes to migrating your database to SQL Azure, there is a great article on what to consider here: https://azure.microsoft.com/en-us/documentation/articles/sql-database-cloud-migrate/

When I migrate a database from SQL Server to SQL Azure, I typically follow this process using SSMS:

· Create a blank database on the SQL Azure database server

· Generate the scripts from the original database to create the database objects, excluding users

· Do a find and replace to remove any unsupported features, such as those mentioned above

· Run the create database object scripts against the new SQL Azure database

· Create the users and apply permissions for the new database

· Use SSMS or SSIS to copy the data over to the new database.
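The find-and-replace step in the process above can be scripted. The following is a minimal sketch in Python, not a complete migration tool: the function name `clean_script_for_sql_azure` and the sample table are made up for illustration, and the regular expressions only cover the two clauses called out earlier (NOT FOR REPLICATION and the WITH (...) ON [PRIMARY] index options). Generated scripts vary, so review the output before running it.

```python
import re

# Patterns for the two clauses the article says to strip from generated scripts.
NOT_FOR_REPLICATION = re.compile(r"\s+NOT\s+FOR\s+REPLICATION\b", re.IGNORECASE)
# Matches "WITH (<options>) ON [PRIMARY]" where <options> has no nested parentheses.
WITH_OPTIONS_ON_PRIMARY = re.compile(
    r"\s*WITH\s*\([^()]*\)\s*ON\s*\[PRIMARY\]", re.IGNORECASE
)


def clean_script_for_sql_azure(script: str) -> str:
    """Return `script` with the two unsupported clauses removed."""
    script = NOT_FOR_REPLICATION.sub("", script)
    script = WITH_OPTIONS_ON_PRIMARY.sub("", script)
    return script


# Hypothetical fragment of an SSMS-generated script, for illustration only.
sample = """CREATE TABLE [dbo].[Orders](
    [Id] [int] IDENTITY(1,1) NOT FOR REPLICATION NOT NULL,
    CONSTRAINT [PK_Orders] PRIMARY KEY CLUSTERED ([Id] ASC)
    WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]
)"""

print(clean_script_for_sql_azure(sample))
```

Regular expressions keep the sketch short, but they do not parse T-SQL. Anything unusual, such as options with nested parentheses, will be left untouched and needs a manual pass.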

The SQL Database Management Portal is a web-based, scaled-down version of SSMS. You can create objects, run queries, and view execution plans, but there is no GUI for some of the security features, like creating users and logins. I find that it’s a friendlier experience to create the database server in the portal, and do everything else using SSMS.

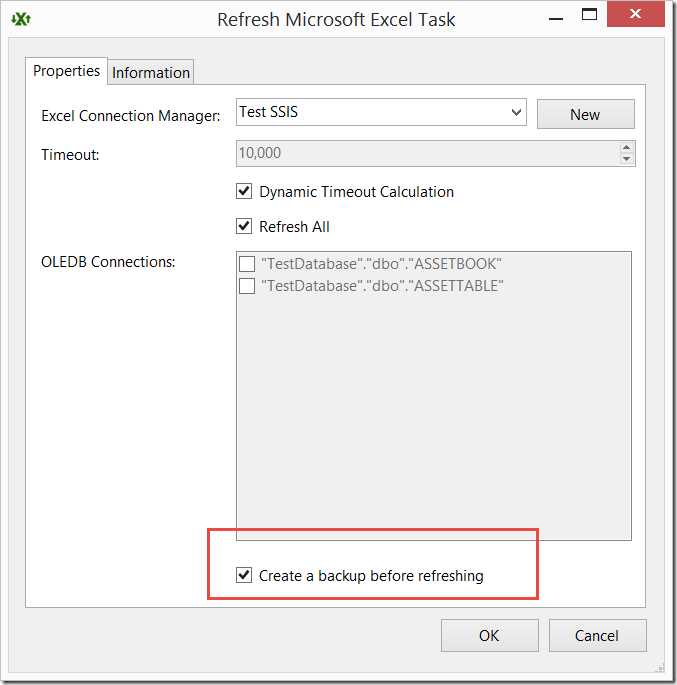

BACKUP

SQL Azure databases are protected by an automatic backup system. The length of time the backups are retained depends on what tier you buy – 7 days for Basic, 14 days for Standard and 35 days for Premium. The point-in-time restore is a self-service feature that costs you nothing unless you use it. If you use it, you pay regular rates for the new database that gets restored. You get all of the protection without any additional cost.
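To make the retention windows concrete, here is a small Python sketch that works out how far back a point-in-time restore could reach for each tier. It uses only the retention periods quoted above; the function names are invented for this example, and it says nothing about how the restore itself is requested.

```python
from datetime import datetime, timedelta

# Backup retention in days per service tier, as quoted in the article.
BACKUP_RETENTION_DAYS = {"Basic": 7, "Standard": 14, "Premium": 35}


def earliest_restore_point(tier: str, now: datetime) -> datetime:
    """Oldest point in time a database in `tier` could be restored to."""
    return now - timedelta(days=BACKUP_RETENTION_DAYS[tier])


def can_restore_to(tier: str, target: datetime, now: datetime) -> bool:
    """True if `target` falls inside the tier's retention window."""
    return earliest_restore_point(tier, now) <= target <= now


now = datetime(2015, 5, 31)
target = datetime(2015, 5, 20)  # 11 days before `now`
for tier in BACKUP_RETENTION_DAYS:
    print(tier, can_restore_to(tier, target, now))
```

With these example dates, an 11-day-old restore point is outside the Basic window but inside the Standard and Premium windows.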

SECURITY

You are in complete control of IP-specific access to your SQL Azure database, at both the server AND database level. No one has access by default. At the server level, you go into the Management Portal and add your IP in order to be able to connect remotely to the database. Or, if you prefer a more granular level of security, you can add a firewall rule at the database level to allow specific IPs to connect. Yes, every time your IP changes, you have to update your firewall rules.
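If a connection is refused, it helps to check whether your current IP actually falls inside one of your rules. The Python sketch below models each rule as a name with an inclusive start and end address, which is an assumption made for illustration; the rule names and addresses are invented, and the code does not talk to Azure at all.

```python
from ipaddress import ip_address


def ip_allowed(client_ip: str, rules: dict) -> bool:
    """Return True if `client_ip` lies inside any (start, end) range in `rules`.

    `rules` maps a rule name to an inclusive (start_ip, end_ip) pair.
    An empty rule set allows nobody, matching the "no access by default" behaviour.
    """
    addr = ip_address(client_ip)
    return any(
        ip_address(start) <= addr <= ip_address(end)
        for start, end in rules.values()
    )


# Hypothetical rules, for illustration only.
rules = {
    "Office": ("100.100.1.1", "100.100.1.50"),
    "HomeLaptop": ("100.100.9.7", "100.100.9.7"),  # single address
}

print(ip_allowed("100.100.1.25", rules))
print(ip_allowed("100.100.2.1", rules))
```

A single-address rule is just a range whose start and end are the same, which is why a changed IP means editing the rule.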

SERVICE TIERS AND PERFORMANCE LEVELS

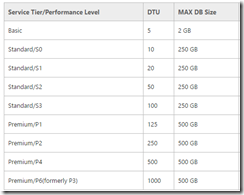

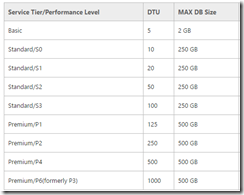

There are three tiers, with several levels of performance within them. I will summarize the Microsoft definitions.

· Basic: Best suited for a small size database, supporting typically one single active operation at a given time.

· Standard: The go-to option for most cloud applications, supporting multiple concurrent queries.

· Premium: Designed for high transactional volume, supporting a large number of concurrent users and requiring the highest level of business continuity capabilities.

Within the tiers are various performance levels. The definition of a Performance Level includes something called a Database Throughput Unit (DTU). DTUs provide a way to describe the relative capacity of a performance level of Basic, Standard, and Premium databases. DTUs are based on a blended measure of CPU, memory, reads, and writes. As DTUs increase, the power offered by the performance level increases. For example, a performance level with 5 DTUs has five times more power than a performance level with 1 DTU. There is more involved, such as maximum concurrent requests, logins and sessions, but at a high level these are the performance levels from which you can choose:

You’ll want to monitor and play with the levels to find the optimum for your needs. There is a Monitor tab for each database in the Azure Portal where you can review the performance. You can easily change the performance level on the fly. If you are anticipating heavier use, you can up the performance level for a period of time, and then drop it back down when the heavy use period is over. You pay for the higher performance level only while it is selected.

COSTS

The pricing varies greatly depending on how many databases you have, how long they have been up, how big they are, what performance level you select, etc. Microsoft advertises no upfront cost, no termination fees, pay only for what you use, and pay by the hour.

Costs range from about $7 per month for the Basic tier, through $19 to $183 per month for a 250GB database in the Standard tier, up to $566 to $8,500 per month in the Premium tier.
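Because billing is by the hour, temporarily raising the performance level costs far less than running at the higher level all month. The Python sketch below illustrates the arithmetic under loudly stated assumptions: it prorates the monthly prices linearly over a 744-hour (31-day) month, and it treats the $19 and $183 Standard figures above as the "normal" and "peak" levels. Actual billing rules and rates may differ, so treat this as a rough estimate only.

```python
def prorated_monthly_cost(base_monthly: float, peak_monthly: float,
                          peak_hours: float, hours_in_month: float = 744) -> float:
    """Estimate a month's cost when the database runs at a pricier level
    for `peak_hours` and at the base level for the rest of the month.

    Assumes simple linear proration of the monthly prices by the hour.
    """
    if not 0 <= peak_hours <= hours_in_month:
        raise ValueError("peak_hours must be between 0 and hours_in_month")
    base_hours = hours_in_month - peak_hours
    return (base_monthly * base_hours + peak_monthly * peak_hours) / hours_in_month


# Two days (48 hours) at the top Standard price, the rest at the bottom one.
estimate = prorated_monthly_cost(base_monthly=19, peak_monthly=183, peak_hours=48)
print(round(estimate, 2))
```

Under these assumptions the two-day bump adds roughly ten dollars to the month, rather than the full difference between the two monthly prices.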

HOW TO PROVISION A SQL AZURE DATABASE

Create a Windows Azure Platform account

If you don’t already have one, you’ll need to create a Windows Azure Platform account. There is a one-month free trial on offer. This will give you access to all the Azure services including VMs, SQL databases, websites, AD, etc. To sign up, go to http://azure.microsoft.com/. Then sign in to the Microsoft Azure Portal at https://manage.windowsazure.com.

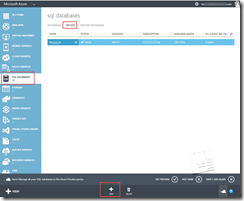

Create a Database Server

When you are ready to create a SQL Azure database, you must first add a server to your subscription on which the database will reside in the cloud. Azure will provision it to the least busy server available in the cloud. The health of the physical servers is monitored and maintained by Microsoft, so you don’t manage them yourself. You don’t need to wait for I.T. to provision a server for you, which could take weeks. Simply sign in, create a server and the admin login credentials, and start adding databases. You can have a server and databases set up in a matter of minutes. To automate server provisioning, you can also use PowerShell or call the REST API directly.

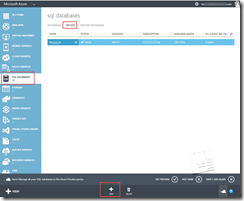

Below is an image of the Microsoft Azure Portal. Notice that we are looking at the SQL Databases, Servers tab. You can add a new server by clicking the add button at the bottom of the screen.

· Log in to the Windows Azure Management Portal.

· Create a SQL database server.

· Create admin login credentials for that server. This will be used like the SA account.

· Choose the subscription to which you want to add the server, and choose the region of the data center where you would like the server to reside.

· You do not get to choose the name of the server. It’s randomly generated.

· Decide if you will "Allow Windows Azure Services". The default is yes. If you were to create a Windows Azure website or cloud service, those services would then have access to the SQL Azure database server and its databases. You can edit this setting on the server’s Configure tab if you change your mind later.

· Click OK. The server will be created in a matter of seconds.

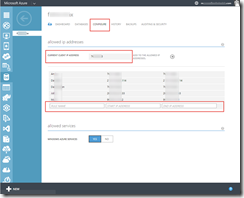

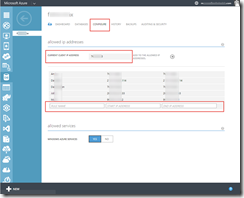

Add Firewall Rules at Server Level

Once you have created the server, you will grab the name of the server, and will likely want to connect to it using SSMS or some other tool. You will connect using the URL yourservername.database.windows.net. Your connection will fail until you add your IP address to the firewall rules.
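For connecting from code rather than SSMS, the server name turns into a connection string. The Python sketch below only builds the string and does not open a connection. Several details are assumptions rather than anything stated above: the ODBC driver name, port 1433, the `user@servername` login form, and the `Encrypt=yes` setting. The server, database and credentials shown are placeholders.

```python
def build_connection_string(server_name: str, database: str,
                            user: str, password: str,
                            driver: str = "ODBC Driver 17 for SQL Server") -> str:
    """Build an ODBC-style connection string for a SQL Azure database.

    `server_name` is the short generated name; the FQDN is derived from it.
    Values containing ';' or '}' would need escaping, which this sketch skips.
    """
    host = f"{server_name}.database.windows.net"
    return (
        f"Driver={{{driver}}};"
        f"Server=tcp:{host},1433;"
        f"Database={database};"
        f"Uid={user}@{server_name};"  # assumed login form: user@servername
        f"Pwd={password};"
        "Encrypt=yes;"
    )


# Placeholder values, for illustration only.
conn_str = build_connection_string("yourservername", "MyDatabase", "adminuser", "secret")
print(conn_str)
```

If you have an ODBC library such as pyodbc installed, a string like this is what you would hand to its connect call, once your IP is allowed through the firewall.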

To add firewall rules and enable service access:

· Click on the database server you created.

· Select the Configure tab.

· You will see your current IP address near the top of the screen.

· Add a rule to allow the IP address range that will be connecting to the database and give it a name.

Now you can connect to the server via its automatically generated FQDN (xxx.database.windows.net). Initially the server contains only the master database.

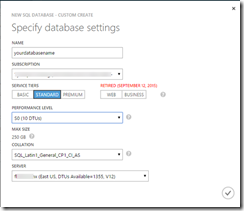

Create a Database

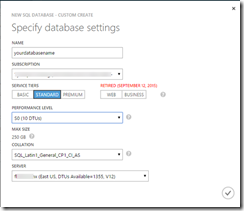

When you create a new database there are a number of settings from which to choose, including Service Tier and Performance Level.

Note: There is an option to select WEB or BUSINESS edition, but this will be retired in September 2015.

Edition: Web (select 1GB or 5GB) or Business (select 10GB to 150GB)

If you create a database in SSMS, it will automatically create a 1GB Web edition database unless you specify otherwise. To create a database in SSMS you can run this SQL script:

CREATE DATABASE <yourdatabasename> (EDITION = 'business', MAXSIZE = 150 GB)

· Key in your database name

· Choose which subscription you are adding this database to

· Choose the Service Tier

· Choose the Performance Level

· Choose the Collation

· Choose on which database server you want the database to reside

Add Firewall Rules at Database Level

You can add firewall rules at the database server level or at the database level. You can do this at the database level using the stored procedure sp_set_database_firewall_rule.

Run this SQL command against your database:

EXEC sp_set_database_firewall_rule N'MyRule', '100.100.1.1','100.100.1.1'

To look up existing database firewall rules use this query:

SELECT * FROM sys.database_firewall_rules
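If you maintain many database-level rules, you may prefer to generate the statements rather than type them. The Python sketch below renders the same sp_set_database_firewall_rule call shown above after validating the address range. The helper name `database_firewall_rule_sql` is invented for this example, and the code only produces text; you still run the resulting statement against your database yourself.

```python
from ipaddress import IPv4Address


def database_firewall_rule_sql(name: str, start_ip: str, end_ip: str) -> str:
    """Render an EXEC sp_set_database_firewall_rule statement.

    Raises ValueError if either address is malformed or the range is reversed.
    """
    start, end = IPv4Address(start_ip), IPv4Address(end_ip)
    if start > end:
        raise ValueError("start_ip must not be greater than end_ip")
    safe_name = name.replace("'", "''")  # escape quotes inside the rule name
    return f"EXEC sp_set_database_firewall_rule N'{safe_name}', '{start}', '{end}'"


print(database_firewall_rule_sql("MyRule", "100.100.1.1", "100.100.1.1"))
```

Validating the addresses before rendering catches typos early, which matters because a wrong rule simply leaves you locked out.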

There are a number of differences and adjustments you may need to make to move to SQL Azure, from unsupported SQL features to managing firewall security. If these are not an issue for you, there is great opportunity to leverage the ease, scalability and reliability of SQL Azure databases. You pay only for what you use and someone else manages the server maintenance, upgrades and backups. It’s a sweet deal.
